The ability to detect and understand other minds is critical for our physical and social survival. The big mystery is how our brains do this: how do we tell a "Who" from a "What"?

To find out, we created doll-human continua: sequences of faces that morph gradually from a doll to a human. We wanted to know where along these continua the face starts to seem alive.

THE TIPPING POINT

We found a consistent tipping point: a face had to be at least 65% human before people would say that it was more human than doll. This is significantly higher than 51% human, the mathematical tipping point for a 0-100 continuum. People are stringent about what counts as alive. The same tipping point occurred whether people were asked if the face "had a mind," "could form a plan," or was "able to experience pain," indicating that recognizing life in a face is tantamount to recognizing the capacity for a mental life (Looser & Wheatley, 2010).
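For readers curious how a tipping point can be read off a continuum, here is a minimal Python sketch. It assumes hypothetical input: for each morph level (percent human), the proportion of trials on which people judged the face more human than doll. The function name `tipping_point` and the linear-interpolation approach are illustrative assumptions, not necessarily the analysis used by Looser & Wheatley (2010), and the numbers below are made up rather than taken from the study.

```python
from typing import Sequence


def tipping_point(levels: Sequence[float], p_human: Sequence[float],
                  criterion: float = 0.5) -> float:
    """Return the morph level (percent human) at which the proportion of
    "more human than doll" judgments first reaches `criterion`.

    Hypothetical helper: uses straight-line interpolation between adjacent
    morph levels, an illustrative choice rather than the study's method.
    """
    if len(levels) != len(p_human) or len(levels) < 2:
        raise ValueError("need matching levels and proportions (at least two)")
    points = list(zip(levels, p_human))
    # If the very first level already meets the criterion, that is the answer.
    if points[0][1] >= criterion:
        return points[0][0]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        if y0 < criterion <= y1:
            # Interpolate between the two levels that bracket the criterion.
            return x0 + (criterion - y0) * (x1 - x0) / (y1 - y0)
    raise ValueError("responses never reach the criterion")


# Made-up responses for illustration only (not data from the study).
levels = [0, 25, 50, 75, 100]
stringent = [0.0, 0.0, 0.25, 0.75, 1.0]
print(tipping_point(levels, stringent))  # → 62.5
```

An observer who treated every level at face value would cross the criterion near the physical midpoint; a stringent observer, whose "human" judgments lag behind the morph level, crosses later on the continuum.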

Looser & Wheatley (2010). Psychological Science. Featured in "News of the Week," Science, 331, 19.

THE EYES HAVE IT

"I looked for the moment when the face looked back"

It turns out that finding another mind requires scrutinizing one particular facial feature: the eyes. Seeing a single eye was enough for participants to judge the presence of life. In contrast, equal-sized views of the nose, the lips, or a patch of skin were far less useful (Looser & Wheatley, 2010). Indeed, as one participant put it, "I looked for the moment when the face looked back at me." This suggests that the age-old aphorism holds: we treat the eyes as "the windows to the soul."

This natural perceptual scrutiny of fine ocular cues that convey a mental life may help explain why eyes are the Achilles' heel of computer-generated imagery (CGI).

FACE - THEN - MIND

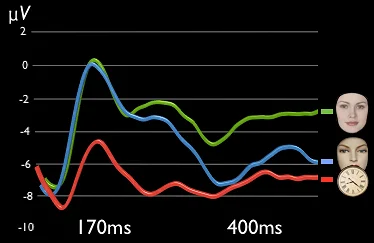

Although we may be experts at detecting a mind in a face, this visual scrutiny takes longer than detecting a face. Face detection is associated with an increased electrocortical response to faces about 170 ms after the image first hits the retina (known as the N170). This well-documented, face-specific response occurs for all kinds of faces: dolls, humans, cartoons, cats. At this early stage, any face will do. (In the graph, note how the blue and green lines overlap at 170 ms.)

However, give the brain a few hundred more milliseconds and only the human faces sustain a heightened response. (In the graph, note how the blue and green lines diverge by 400 ms.) This tells us that once a face is detected, the brain continues to scrutinize that face for evidence of a mind (Wheatley, Weinberg, Looser, Moran, & Hajcak, 2011).

DUAL EFFICIENCY

This two-stage process of face perception is remarkably efficient: first detect all faces, then filter out the false alarms. It ensures the rapid, liberal face detection necessary for survival (better to false alarm to a face-like rock pattern than to miss a foe) while ensuring that we do not waste precious cognitive resources on the false alarms. We may notice a mannequin, but we will not waste our energy trying to read its thoughts.